Row-level security (RLS) is a fine-grained access control mechanism that restricts which rows of data a user or group can access within a table or view. In Databricks, this is commonly implemented using Row Filters.

Row Filters are created as SQL user-defined functions (UDFs) and also incorporate logic from other languages (such as Python) when wrapped in a SQL UDF.

It provides fine-grained access control by dynamically filtering data through conditional expressions and identity functions such as is_account_group_member(group), is_member(group), or other Databricks identity functions.

Creating a Databricks Row Access Policy in PlainID

Ensure that your Databricks app in Orchestration is switched to Manage mode to start building your Row-level Policy in the Policy catalog section. See Managing POPs for more information on how to change the POP Mode.

Row Access Policies in PlainID can be created using the following methods:

- Wizard

- Code

- Native

For more information, refer to Creating Policies.

To create a Row Access Policy with the Policy Wizard:

-

Fill in Policy Details. For more information, refer to the Creating Policies article

-

Fill in Databricks POP Details specific to the vendor Policy:

- Under Vendor Policy Kind dropdown, select Row Access Policy. (Required)

- Under Vendor Policy Name, enter a unique name (in small letters) for the Policy that will appear in the Databricks vendor. (Required)

- Note: The same name can be used across multiple PlainID Policies to define multiple logic statements within a single Databricks Row Access policy (UDF).

- Under Vendor Policy ID, note that the ID is automatically generated. It is the ID used in Policy management operations like deploy, update, and override.

- Under Vendor Policy Order, define the execution order for the logical case statement within the same Vendor Policy ID. (Required)

- Default value is 1. Use this field when enforcing multiple logic conditions for different Identity Groups within the same Policy.

- Under Comment, add any additional information or clarifications about your Policy. (Optional)

- Under Catalog, select the Databricks catalog where the Policy will be deployed.

- Under Schema, select the schema within the catalog where the Policy resides.

- Ensure that your Databricks app in Orchestration is switched to Manage mode to start building your Row-level Policy in the Policy catalog section. See Managing POPs for more information on how to change the POP Mode.

-

Click Continue.

Who Section

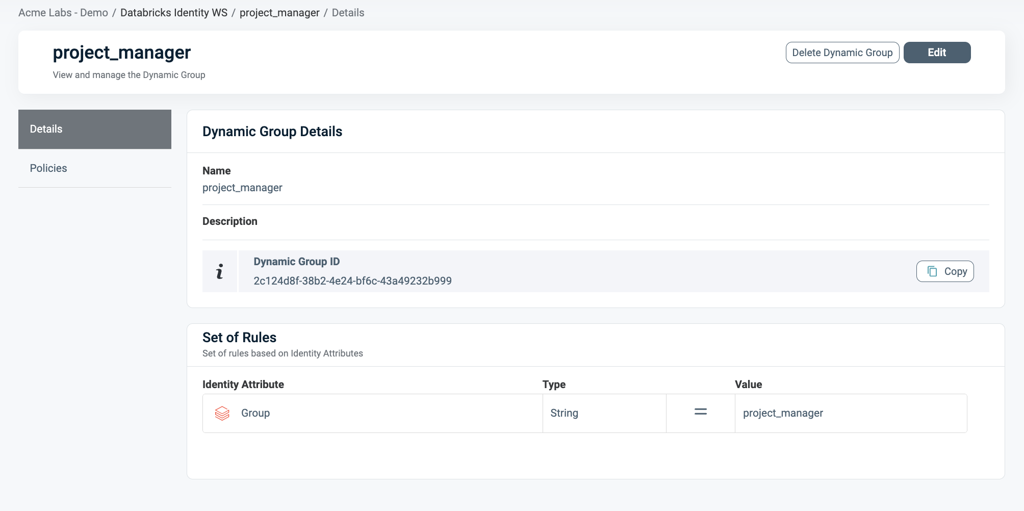

In the Who section, you assign Row-level access by creating a new Dynamic Group or selecting an existing one. You can evaluate multiple Dynamic Groups within a single Row Access Policy, enabling centralized and flexible access control logic.

Define a new Dynamic Group using the available vendor identity functions to grant access based on user identity parameters.

Supported Databricks identity functions include is_account_group_member(group), is_member(group), session_user(), and current_user().

You can use multiple identity functions per Dynamic Group.

To apply your Policy logic to all users, you can select the default "All Users" Dynamic Group.

For information on how to create a Dynamic Group, see Managing Dynamic Groups.

What Section

In the What section, you can define access through your Asset Types.

To define access:

- Click Add Asset Type. Ensure that your Asset Type meets the requirements listed below

- From the dropdown, select an Asset Type.

- Select existing Rulesets for your Policy logic or create a new one. A Policy can include multiple Rulesets, note all combined with logical OR.

- See Defining a Ruleset for Row-level Filtering for more information.

- Click Save.

Asset Types for Row-Level Requirements

Ensure that your Asset Type/s meet the following requirements:

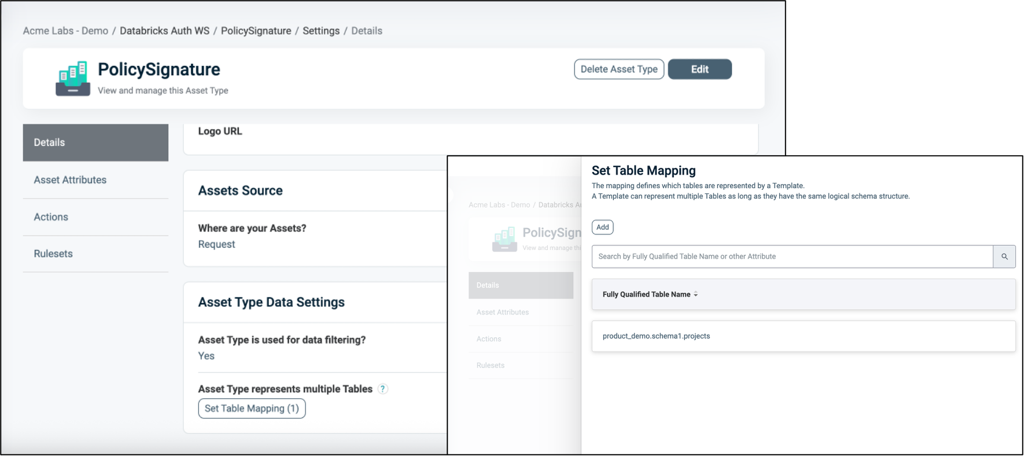

Table Mapping

To set Table Mapping:

- In the Authorization Workspace, click on the Assets & Conditions tab.

- Click on the relevant Asset.

- Refer to Managing Asset Types to learn how to create or edit an Asset Type.

- Ensure that "Is used for data filtering?" is enabled.

- Select Set Table Mapping to link your logical Asset Type with one or more physical Tables or Views in Databricks where the Row Access Policy should apply.

- The mapping must include the fully qualified path (in lower-case) to the table or view, following the format:

catalog_name.schema_name.table_name.

- The mapping must include the fully qualified path (in lower-case) to the table or view, following the format:

For more information about Table Mapping, refer to Dynamic Data Mapping.

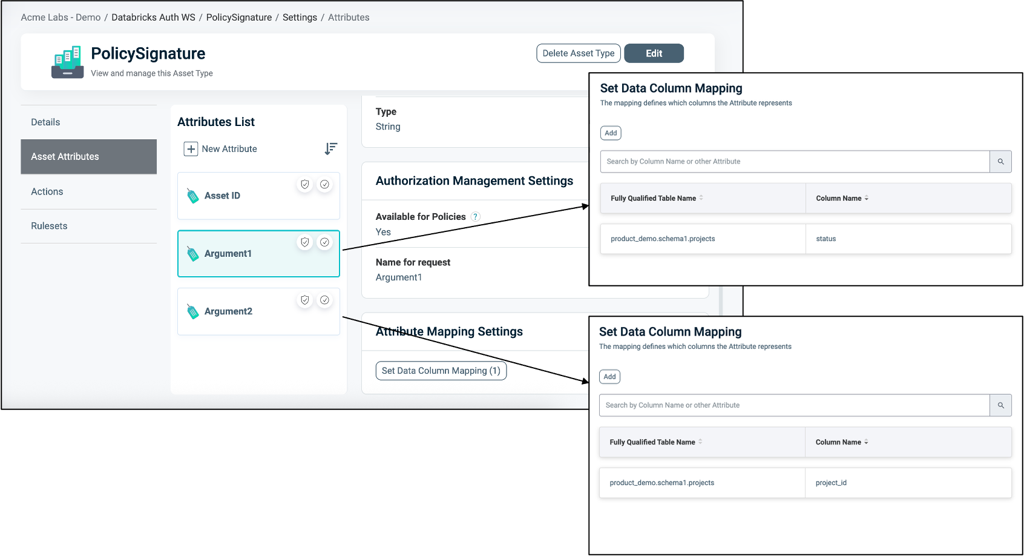

Attribute Mapping

To set Attribute Mapping:

- Choose an existing Attribute or create a new one to use in your filtering logic.

- Refer to Managing Asset Attributes for more details.

- Define the data type (for example, String, Numeric).

- Enable Available for Policies = Yes. These attributes act as placeholders for filtering conditions.

- Click Set Data Column Mapping to map the logical Attributes to one or more physical Databricks column/s (in lower-case).

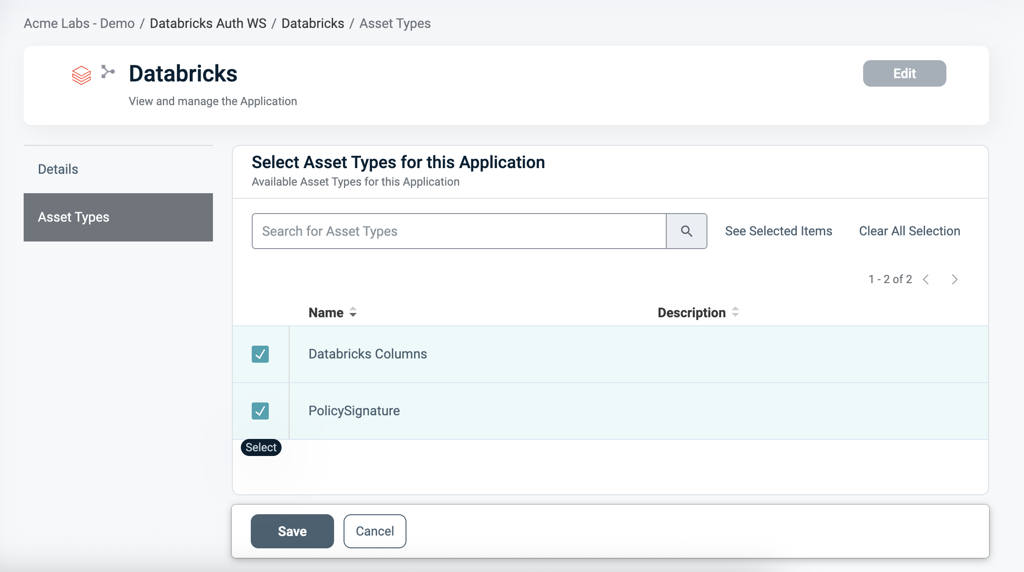

To connect an Asset Type with the Databricks Application:

- Once the Asset Type is created, open the Databricks application.

- Navigate to the Asset Types tab.

- Click Edit.

- Select the relevant Asset Types you want to associate with the Databricks application.

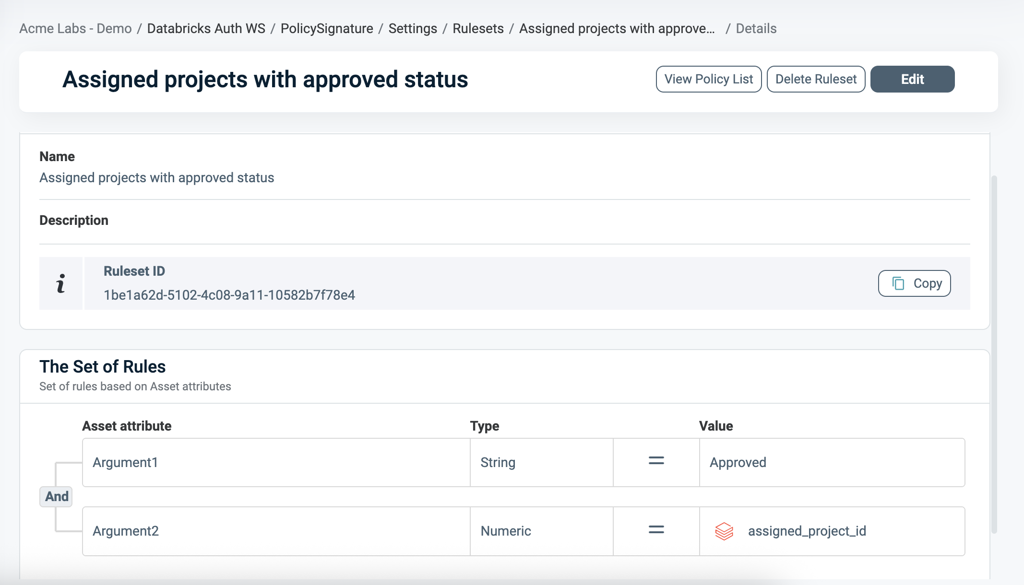

Defining a Ruleset for Row-level Filtering

To define a Ruleset:

In the Asset type within the Ruleset tab, define the Row-level filtering logic using the Asset Attributes you created (for example, Status = 'Active').

You can use Databricks tables as an external Identity Source for contextual or dynamic rules (for example, Project_id = Assigned_project). See our article Managing Identity Source for more information.

Use PlainID's flexible logic to combine operators (=, !=, >, IN, etc.) with complex AND/OR relationships.

When Section

In the When section, you define the Conditions that provide contextual identity data for your Policy, which determine when the Policy applies.

To define a Condition:

- Select an existing Condition or create a new one. A Policy can include multiple Conditions, combined with logical AND meaning all Conditions must be met for the Policy to grant access. Ensure that you select Conditions from Identity Attributes linked to the POP's Identity Source.

- See Managing Conditions to learn how to create a new Identity Attribute Condition.

- Ensure that the Condition meets the Conditions for Row-Level Requirements.

- Click Save.

Conditions for Row-Level Requirements

- Only Conditions based on** Identity Attributes** are supported for use in Row Access Policies.

- Ensure that an Identity Source table is defined within your Policy Orchestration Point (POP).

- Use an Identity Attribute that is mapped to one of the additional Identity Sources associated with the selected POP. For more details, refer to our Managing Identity Source article.

- Within a single Condition, you can only use Attributes from one Source.

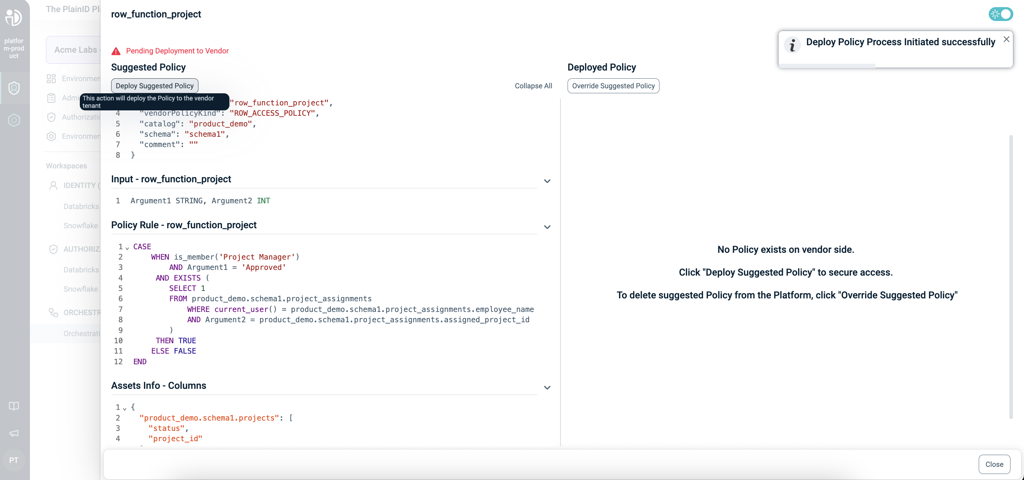

Deploying the Policy

Once complete, navigate to the Orchestration Workspace and deploy the Policy to Databricks.

Databricks enforces the access decision based on the Row-level filtering you've defined in the PlainID platform.

Databricks Row Filters- SQL Function Structure

This table describes the Databricks Row Filters Function components in SQL and compares them to their equivalents in PlainID to help you translate and build your policies easily within the PlainID Platform.

Policy Syntax Table

| Databricks terminology | Databricks Syntax | Description | PlainID terminology |

|---|---|---|---|

| Policy Declaration | CREATE OR REPLACE FUNCTION <Function_name> |

Defines the Function name. The function can be used for Row-level filtering or for column masking. Used to declare or replace an existing Function. | Vendor Policy Name |

| Policy Signature | AS (Argument1 VARCHAR, Argument2 NUMBER...) RETURNS BOOLEAN |

Declares the Policy's input arguments and return type. Arguments are used in the Policy logic to determine access. Returns a BOOLEAN value indicating row visibility. | Policy Signature mapped to Asset Type. Each argument that is used within the Policy logic is created as an Asset Attribute. The Boolean return type defines the Vendor Policy Kind as Row Access Policy |

| Policy Logic | WHEN... |

Incorporates Databricks identity functions: is_account_group_member(group), is_member(group), session_user(), and current_user(). This determines who should receive access in a given context. |

Dynamic Group |

CASE... |

Use SQL expressions such as CASE statements to define the access logic used to evaluate row visibility. | Policy logic uses the Policy arguments mapped into Rulesets, which can be dynamic (based on external identity data) or static (using fixed values). | |

EXISTS (SELECT ... FROM... WHERE...) |

Use SQL subqueries with EXISTS for dynamic, context-based filtering. | Policy logic uses an external table mapped into Identity Attribute conditions, where expressions typically compare attributes to static values. Note that a correlation must be defined using one of the Databricks identity functions (e.g., is_account_group_member(group), is_member(group), session_user(), and current_user(). |

|

| Policy Evaluation | THEN TRUE → Include row, THEN FALSE → Exclude row |

The Function returns a BOOLEAN for each row: TRUE includes the row in the result set; FALSE excludes it. | PlainID centrally manages the Policy rules and identity context. Databricks is responsible for the evaluation. |

| Policy Application | ALTER TABLE 'catalog.schema.table' SET ROW FILTER 'function_name' ON ('column1', 'column2'...); |

Attaches a Row filter Function to a table in Databricks Unity Catalog. The same Policy can be reused across multiple tables. | Defined in the Asset Type Data Settings. You can configure the Tables and Columns associated with the Policy. |

Row Filter Example

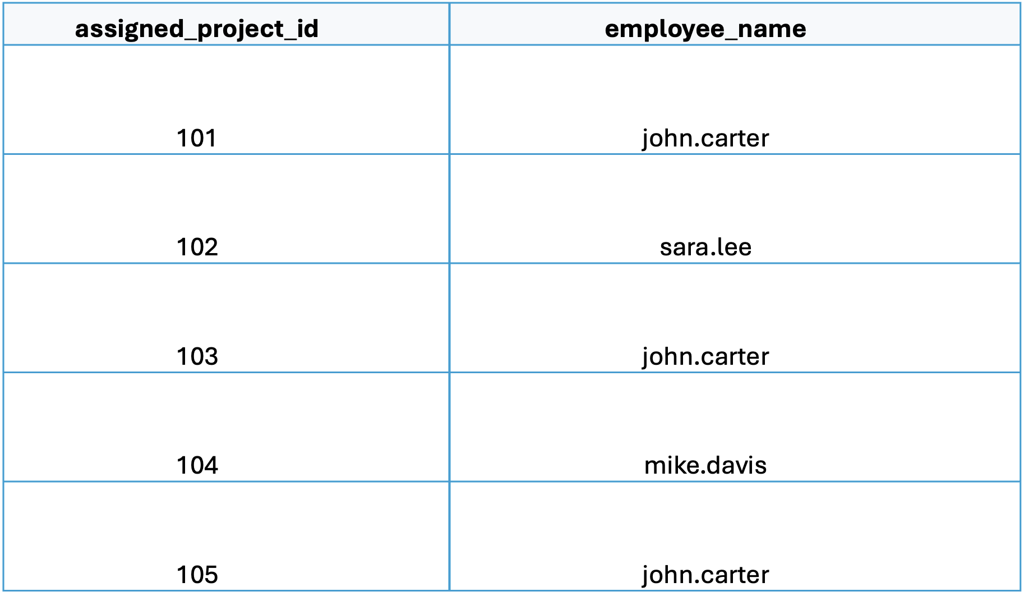

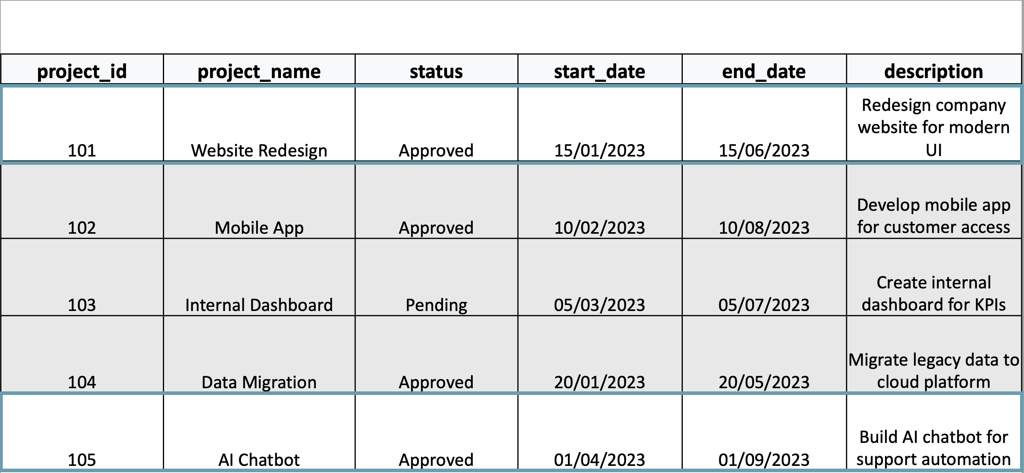

The following example demonstrates a Row Access Policy that allows Project Managers to only see "Approved" projects assigned to them.

CREATE OR REPLACE FUNCTION Row_function_project(Status STRING, project_id int)

RETURNS BOOLEAN

RETURN

CASE

WHEN is_member('project_manager')

AND status = 'Approved'

AND EXISTS (

SELECT 1

FROM project_assignments

WHERE current_user() = employee_name

AND project_id = assigned_project_id

)

THEN TRUE

ELSE FALSE

END;

ALTER TABLE `product_demo`.`schema1`.`projects`

SET ROW FILTER `product_demo`.`schema1`.`Row_function_project`

ON (`status`, `project_id`);

This demonstrates that John is assigned to projects 101, 103, and 105, but can access projects 101 and 105 since project 103 is still pending: