This document provides a practical example of how the PlainID LangChain Authorizer enforces fine-grained, policy-based access control within an AI workflow. We'll explore a specific scenario involving Sara, a Tier-1 support analyst, to demonstrate how PlainID ensures she can only ask permitted questions, access authorized data, and view non-sensitive content, all aligned with enterprise security and compliance standards.

For a comprehensive introduction to the PlainID LangChain Authorizer, refer to the LangChain overview documentation. To learn more about integrating this solution into your Environment, see the Integrating LangChain Authorizer in PlainID documentation.

Example Use Case: Sara – Tier-1 Support Analyst (EU Region)

Sara, a Tier-1 support analyst based in Germany, has her access governed by organizational policies. Specifically, her permissions are defined as follows:

- Can view support cases categorized as `product_support` and `login_issues`
- Cannot access topics like `billing` or `internal_security`
- Limited to case data from the `eu-central` region
- Not permitted to view raw PII, such as `customer_reporter_name`
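As an illustration only, the rules above can be modeled as plain Python data with a default-deny check: anything not explicitly allowed (such as `billing`) is refused. The `AccessProfile` class and its methods are hypothetical and are not part of `langchain_plainid`; in practice these rules are defined as PlainID policies and evaluated by the platform.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AccessProfile:
    """Hypothetical, illustrative model of a user's effective permissions."""
    user_id: str
    allowed_categories: frozenset
    allowed_regions: frozenset
    hidden_pii_fields: frozenset = field(default_factory=frozenset)

    def can_ask_about(self, category: str) -> bool:
        # Allow-list: categories that are not listed are denied by default.
        return category in self.allowed_categories

    def can_read_region(self, region: str) -> bool:
        return region in self.allowed_regions

    def can_see_field(self, field_name: str) -> bool:
        # Fields classified as PII for this user are hidden.
        return field_name not in self.hidden_pii_fields


sara = AccessProfile(
    user_id="sara",
    allowed_categories=frozenset({"product_support", "login_issues"}),
    allowed_regions=frozenset({"eu-central"}),
    hidden_pii_fields=frozenset({"customer_reporter_name"}),
)

print(sara.can_ask_about("login_issues"))            # True
print(sara.can_ask_about("internal_security"))       # False
print(sara.can_read_region("us-east"))               # False
print(sara.can_see_field("customer_reporter_name"))  # False
```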

Prerequisites

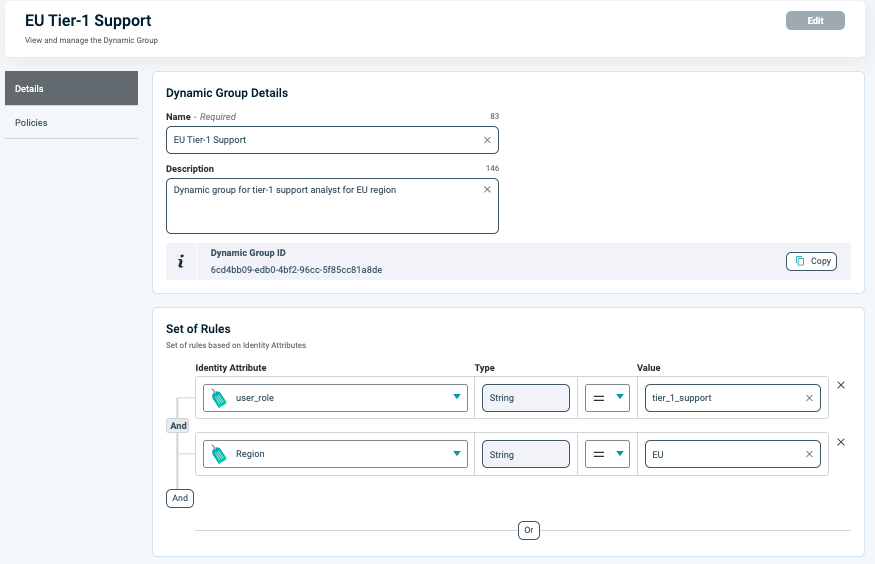

A PlainID Dynamic Group: Before enforcing policies, Sara's identity and attributes (such as role: Tier-1, location: EU, and user ID: sara) must be defined as part of a Dynamic User Group within the PlainID platform.

This user group is then referenced in policies governing:

- Allowed prompt categories (e.g., `product_support`, `login_issues`)
- Accessible data regions (e.g., `eu-central`)
- PII visibility rules
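The idea behind a dynamic group, with membership computed from identity attributes rather than assigned by hand, can be sketched as a predicate over those attributes. The group name `tier1-eu-support`, the attribute keys, and the helper functions below are hypothetical; in PlainID these conditions are configured in the platform, not written in Python.

```python
from typing import Callable, Dict, List

Identity = Dict[str, str]
GroupRule = Callable[[Identity], bool]


def tier1_eu_support(identity: Identity) -> bool:
    """Hypothetical membership condition: Tier-1 role AND EU location."""
    return identity.get("role") == "Tier-1" and identity.get("location") == "EU"


def resolve_groups(identity: Identity, groups: Dict[str, GroupRule]) -> List[str]:
    # Membership is evaluated from the current attributes on every call,
    # so changing a user's attributes changes the groups they fall into.
    return sorted(name for name, rule in groups.items() if rule(identity))


groups = {"tier1-eu-support": tier1_eu_support}
sara = {"user_id": "sara", "role": "Tier-1", "location": "EU"}

print(resolve_groups(sara, groups))                        # ['tier1-eu-support']
print(resolve_groups({**sara, "location": "US"}, groups))  # []
```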

The guardrails identify the requesting user to PlainID through a provider object. The categorizer and anonymizer use a `permissions_provider`; the following code block shows an initialization example:

```python
from langchain_plainid import PlainIDPermissionsProvider

permissions_provider = PlainIDPermissionsProvider(
    base_url="https://plainid-tenant-host",
    client_id="plainid-client-id",
    client_secret="plainid-client-secret",
    entity_id="sara",  # The user making the request
    entity_type_id="User",
)
```

Prompt Example

"What product support issues are related to internal security?"

This query tests Sara's access boundaries across three policy-enforced guardrails:

Guardrail 1: Prompt Categorization

Before answering, the agent checks if the question's topic is permitted. Since the query includes "internal security," a restricted topic for Sara, access is denied. As a Tier-1 analyst operating under EU regulations, Sara is not permitted to initiate queries related to sensitive internal domains such as security, finance, or compliance.

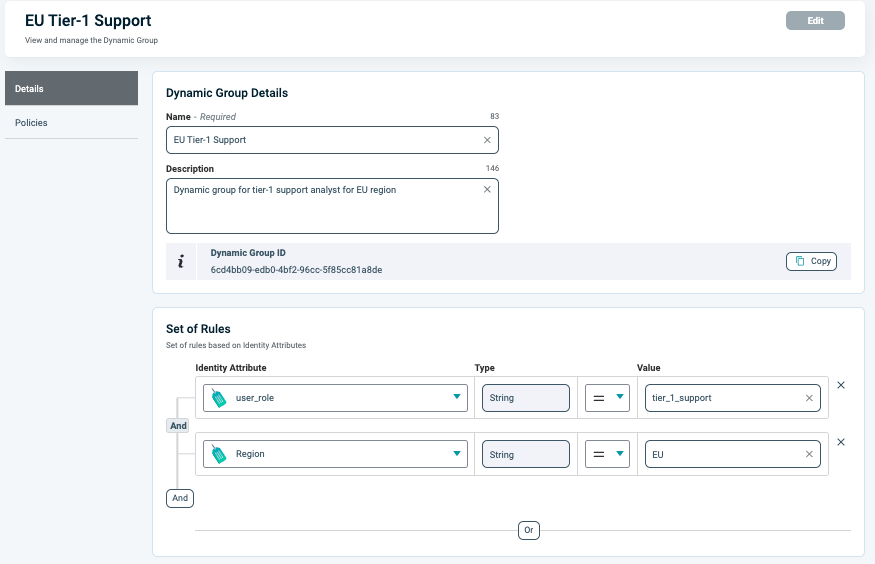

Policy Implementation for Categorizer (Pre-Query)

The policy defines Sara's allowed categories (case types).

The image below shows the outcome of the policy evaluation, indicating the categories permitted for Sara.

Enforce Categorization Before Processing the Prompt

```python
from langchain_plainid import PlainIDCategorizer
from langchain_plainid.providers import LLMCategoryClassifierProvider

# `llm` is assumed to be a LangChain chat model you have already configured;
# `permissions_provider` is the provider initialized in the Prerequisites section.
llm_classifier = LLMCategoryClassifierProvider(llm=llm)

plainid_categorizer = PlainIDCategorizer(
    classifier_provider=llm_classifier,
    permissions_provider=permissions_provider,
)

user_query = "What product support issues are related to internal security?"

try:
    # Classify the query and check the category against Sara's policy
    category = plainid_categorizer.invoke(user_query)
    print(f"[Gate 1: ALLOWED] Query categorized as '{category}', proceeding to Retriever.")
except ValueError as e:
    # Reached when the query's category is not permitted for the user
    print(f"[Gate 1: DENIED] {e}")
    print("→ Response:\nYou're not authorized to ask about these topics.")
```
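The decision this gate makes can be illustrated without an LLM or a PlainID tenant. The sketch below swaps the LLM classifier for a simple keyword lookup (`CATEGORY_KEYWORDS`, `classify`, and `check_query` are hypothetical) and applies a fail-closed rule: the query is denied if it cannot be categorized, or if any detected category falls outside Sara's allowed set. The real categorizer's behavior is governed by your PlainID policy, not by this code.

```python
# Sara's allowed categories, as listed in the use case above.
ALLOWED_CATEGORIES = {"product_support", "login_issues"}

# Hypothetical keyword table used here instead of an LLM classifier.
CATEGORY_KEYWORDS = {
    "product_support": ("product support", "product issue"),
    "login_issues": ("login", "mfa", "password"),
    "billing": ("billing", "invoice", "refund"),
    "internal_security": ("internal security", "security incident"),
}


def classify(query: str) -> set:
    """Return every category whose keywords appear in the query."""
    text = query.lower()
    return {cat for cat, words in CATEGORY_KEYWORDS.items() if any(w in text for w in words)}


def check_query(query: str, allowed: set = ALLOWED_CATEGORIES) -> set:
    """Raise ValueError unless every detected category is allowed (fail closed)."""
    categories = classify(query)
    if not categories:
        raise ValueError("Query could not be categorized")
    denied = categories - allowed
    if denied:
        raise ValueError(f"Unauthorized categories: {sorted(denied)}")
    return categories


try:
    check_query("What product support issues are related to internal security?")
except ValueError as e:
    print(f"[Gate 1: DENIED] {e}")  # [Gate 1: DENIED] Unauthorized categories: ['internal_security']
```

Because Sara's prompt mixes an allowed topic (`product_support`) with a restricted one (`internal_security`), the strict rule denies the whole query rather than answering the permitted part.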

Guardrail 2: Data Retrieval

If the query is permitted by the categorization Guardrail, a policy-aware retriever fetches only authorized documents (e.g., EU-based incidents).

Policy Implementation for Data Retrieval


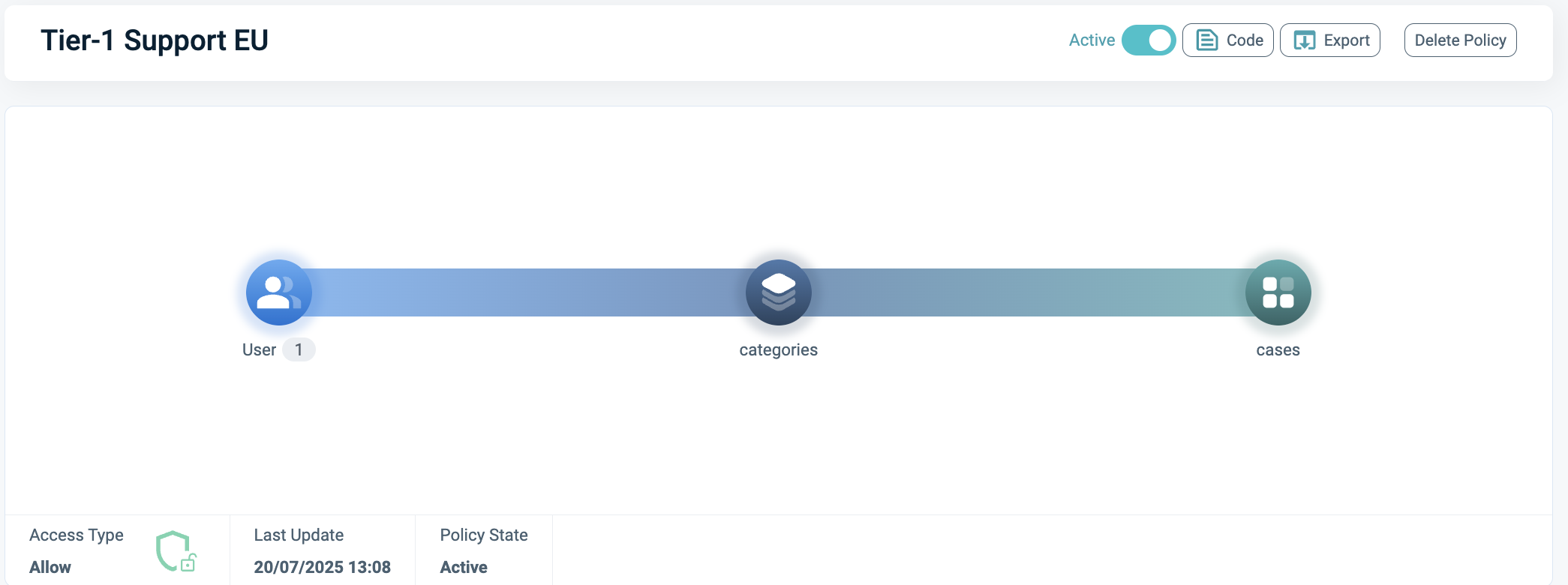

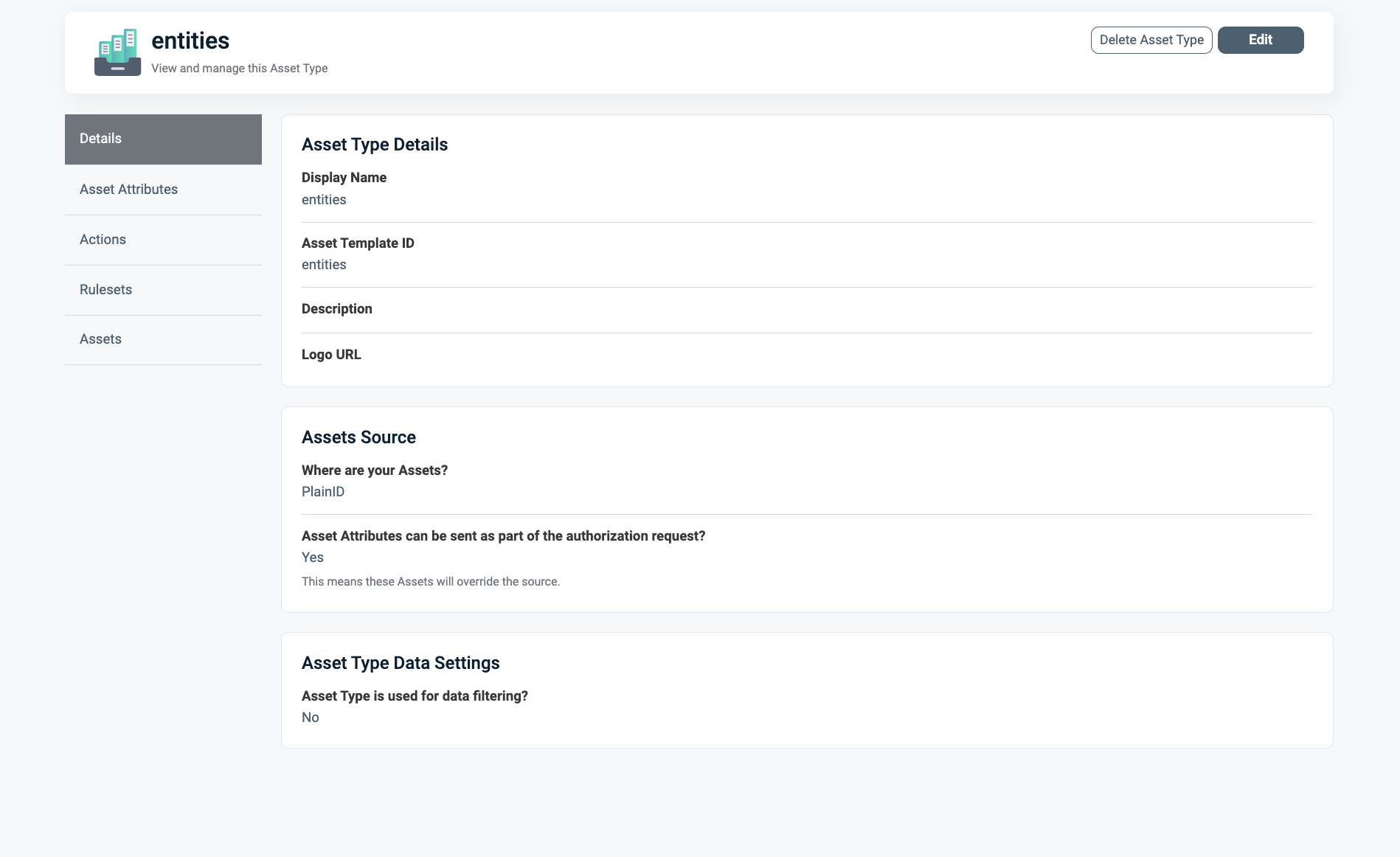

Create an Asset Type in PlainID to represent your documents, as shown in the image below.


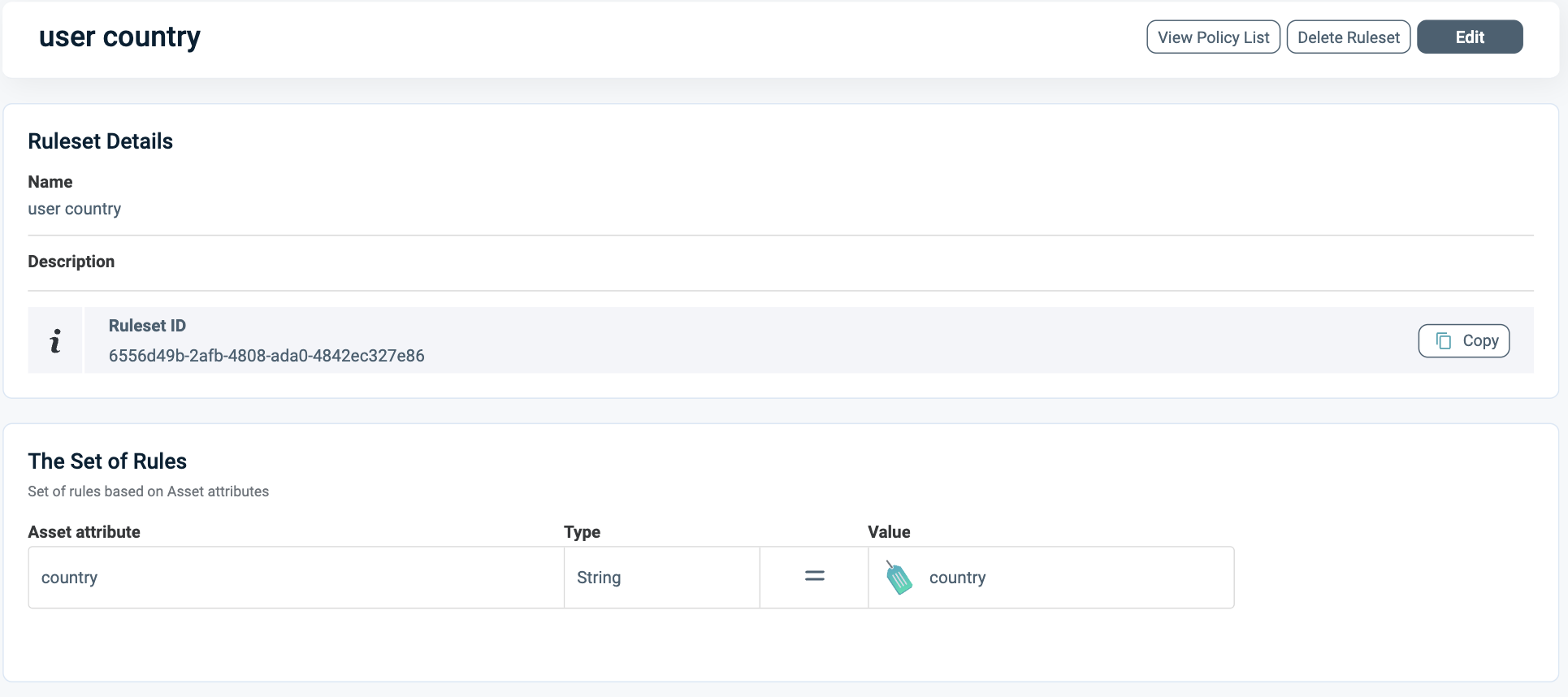

Ensure this Asset Type is marked for data filtering, and define the relevant Attribute for filtering, which is "country" in this example. Following this, create a rule set to enforce the access logic, as depicted below.


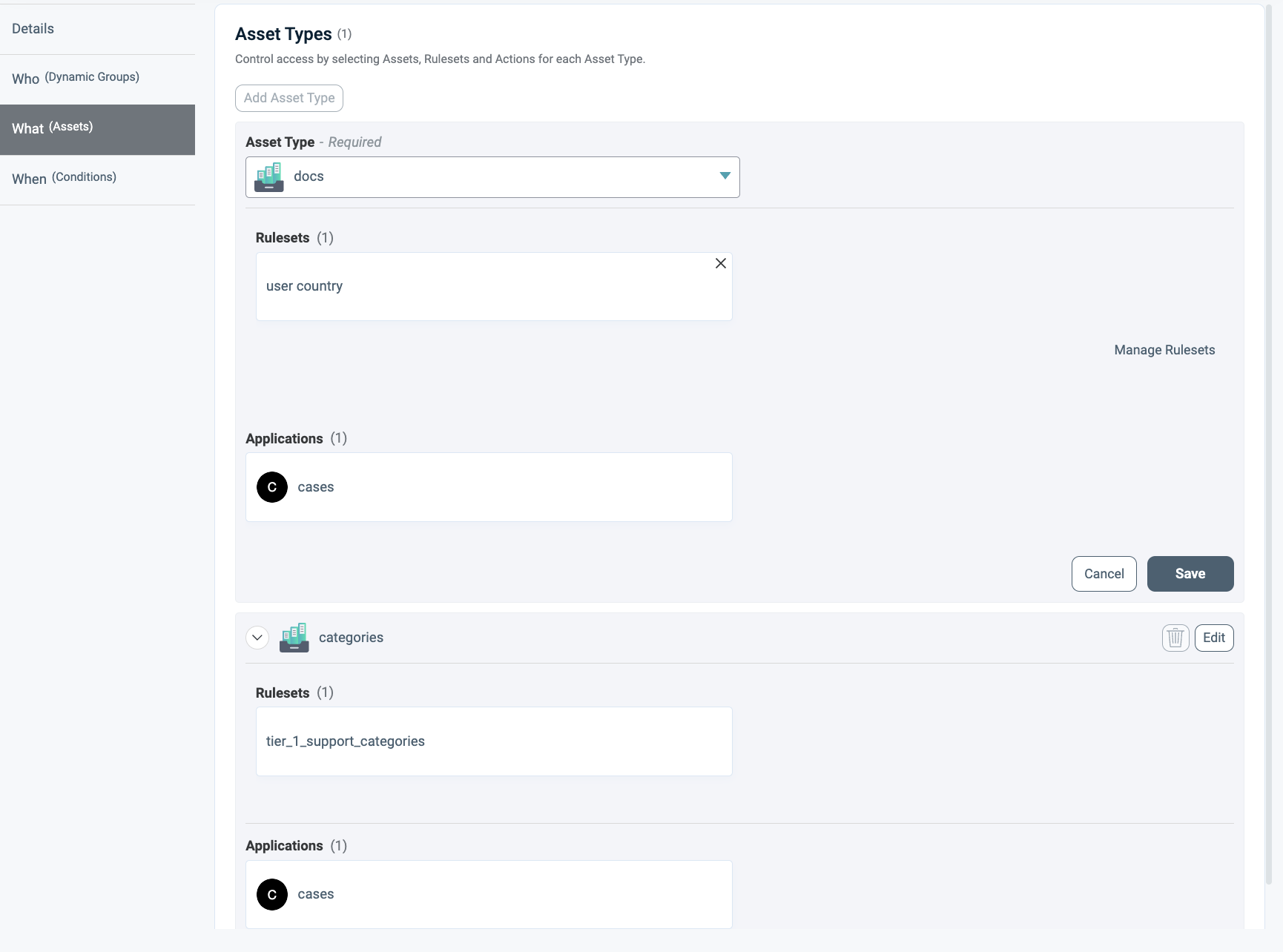

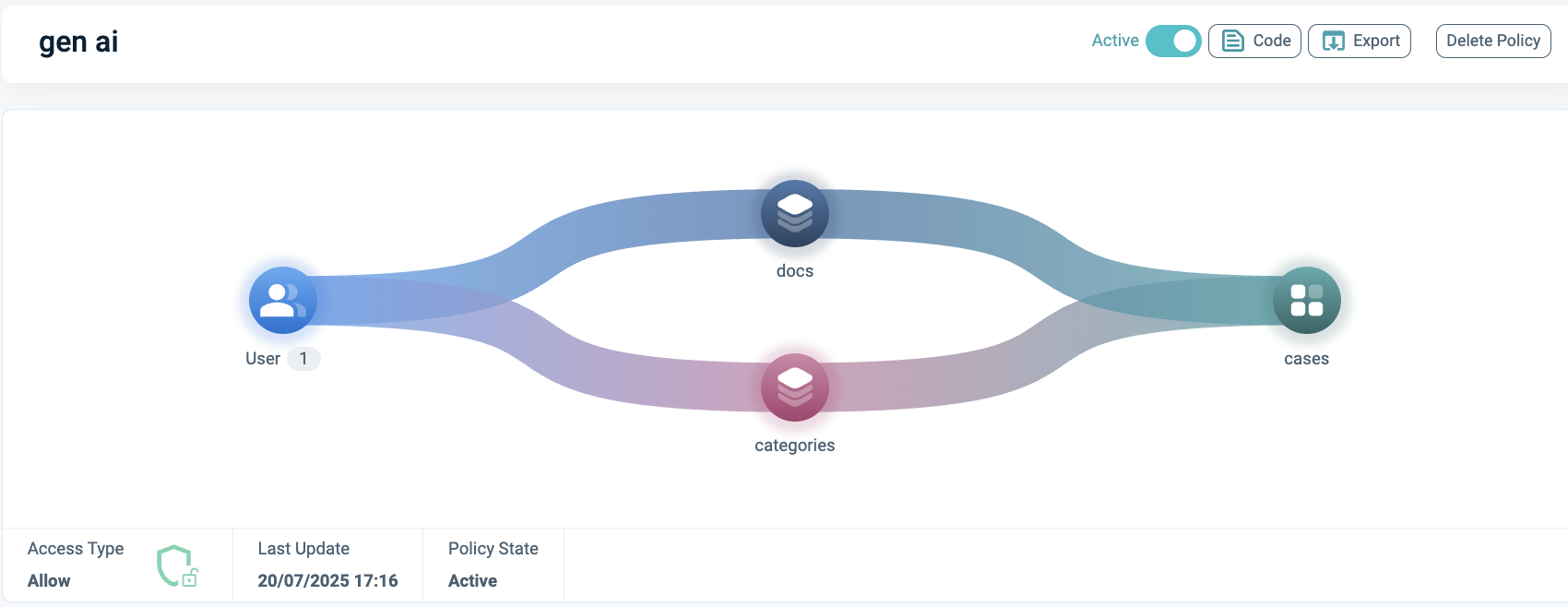

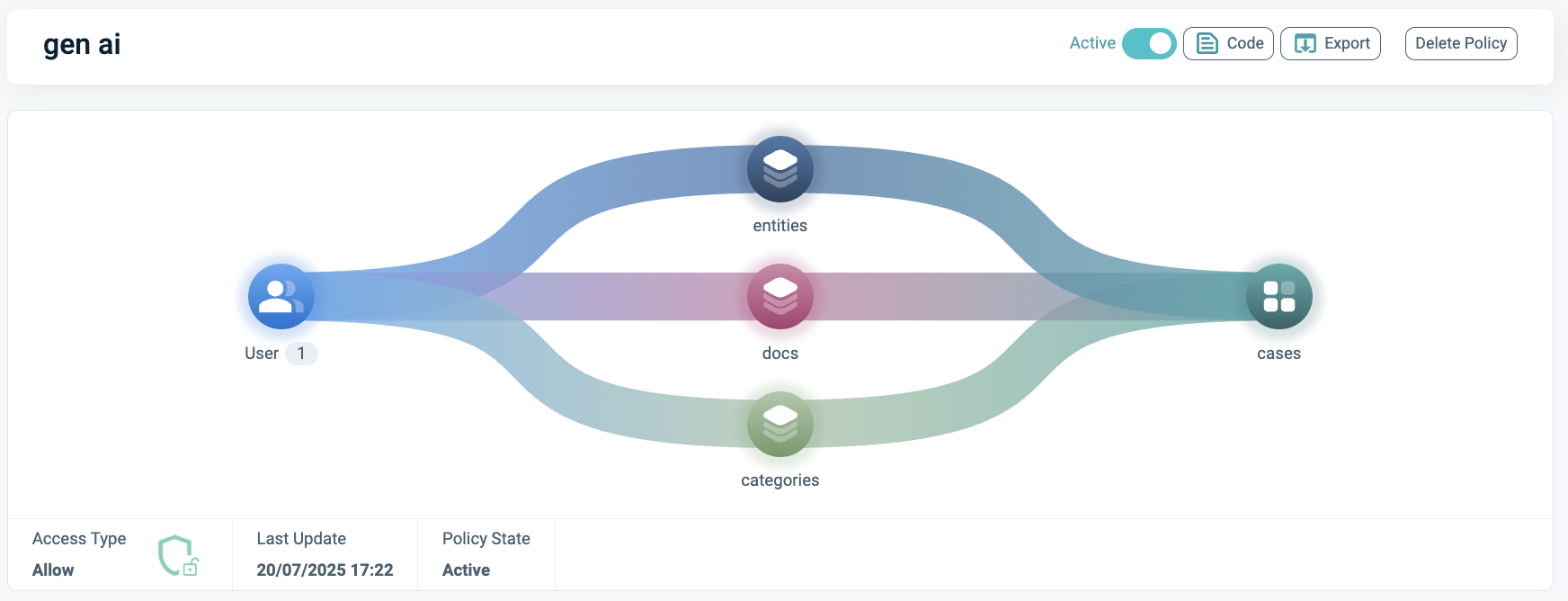

The final step involves attaching the new Asset Type to the Policy, as illustrated in these images.

Enforce Data Retrieval Filtering

The following code example shows how data retrieval filtering works:

```python
from langchain_community.vectorstores import Chroma
from langchain_core.documents import Document
from langchain_core.embeddings import FakeEmbeddings
from langchain_plainid import PlainIDRetriever
from langchain_plainid.providers import PlainIDFilterProvider

# Example documents with country metadata
docs = [
    Document(page_content="Stockholm login outage", metadata={"country": "Sweden", "reporter": "Mike Smith"}),
    Document(page_content="Frequent MFA reset in Sweden", metadata={"country": "Sweden", "reporter": "Anna Lindström"}),
    Document(page_content="NY login failure", metadata={"country": "US", "reporter": "John Miller"}),
    Document(page_content="Helsinki session timeout", metadata={"country": "Finland", "reporter": "Sanna Virtanen"}),
]

# FakeEmbeddings returns random vectors for demos; `size` (the vector dimension) is required
vector_store = Chroma.from_documents(documents=docs, embedding=FakeEmbeddings(size=256))

filter_provider = PlainIDFilterProvider(
    base_url="https://plainid.example.com",
    client_id="plainid-client",
    client_secret="plainid-client-secret",
    entity_id="sara",
    entity_type_id="User",
)

plainid_retriever = PlainIDRetriever(
    vectorstore=vector_store,
    filter_provider=filter_provider,
)

# `user_query` is defined in the categorization example above
filtered_docs = plainid_retriever.invoke(user_query)  # Filters out unauthorized documents
```
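To see which of the example documents would survive, the sketch below applies an equivalent country filter in memory. It assumes, for illustration only, that Sara's policy resolves to the countries Germany, Sweden, and Finland, and it builds the filter in the shape of a Chroma metadata `where` clause using the `$in` operator. `Doc`, `country_filter`, and `matches` are hypothetical helpers; how `PlainIDFilterProvider` expresses its filter internally is not described in this document.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Doc:
    """Minimal stand-in for langchain_core's Document (page_content + metadata)."""
    page_content: str
    metadata: Dict[str, Any] = field(default_factory=dict)


def country_filter(allowed_countries: List[str]) -> Dict[str, Any]:
    # Same shape as a Chroma metadata `where` filter using the `$in` operator.
    return {"country": {"$in": sorted(allowed_countries)}}


def matches(metadata: Dict[str, Any], where: Dict[str, Any]) -> bool:
    # Supports only `$in` conditions, as built by country_filter above.
    for key, condition in where.items():
        if metadata.get(key) not in condition["$in"]:
            return False
    return True


docs = [
    Doc("Stockholm login outage", {"country": "Sweden", "reporter": "Mike Smith"}),
    Doc("Frequent MFA reset in Sweden", {"country": "Sweden", "reporter": "Anna Lindström"}),
    Doc("NY login failure", {"country": "US", "reporter": "John Miller"}),
    Doc("Helsinki session timeout", {"country": "Finland", "reporter": "Sanna Virtanen"}),
]

# Assumption: Sara's policy resolves to these EU countries.
where = country_filter(["Germany", "Sweden", "Finland"])
visible = [d.page_content for d in docs if matches(d.metadata, where)]

print(where)    # {'country': {'$in': ['Finland', 'Germany', 'Sweden']}}
print(visible)  # ['Stockholm login outage', 'Frequent MFA reset in Sweden', 'Helsinki session timeout']
```

With a real Chroma store, a dict of this shape can be passed as the `filter` argument of `similarity_search`, so the US document never enters the candidate set.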

Guardrail 3: Response Anonymization

Any sensitive data, such as PII, is automatically masked or encrypted based on Sara's Policy.

Policy Implementation for Anonymizer


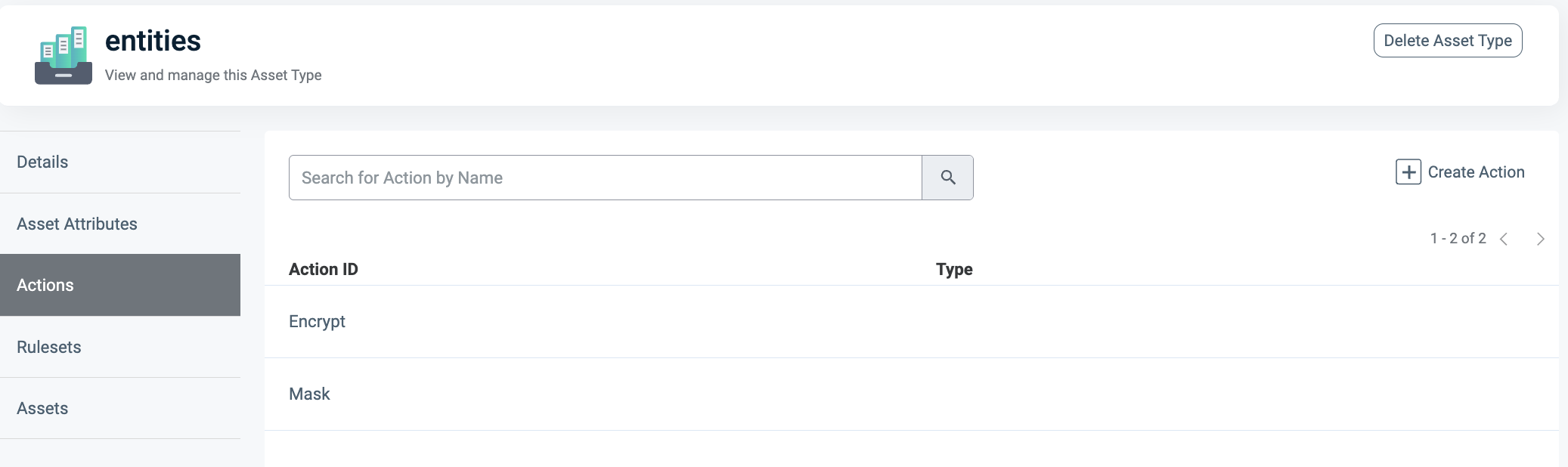

Create an entities Asset Type with the PII data, as depicted below.


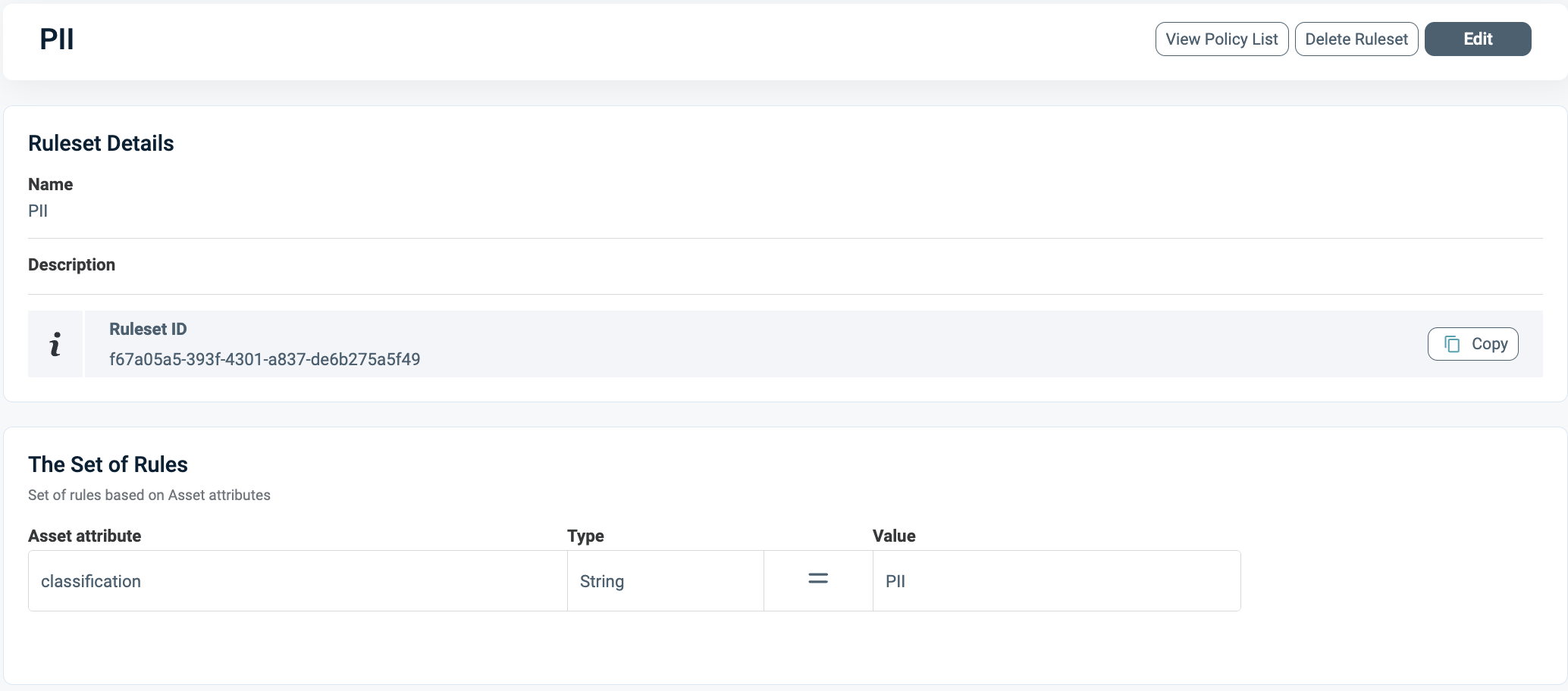

Define a rule set that determines which data is classified as PII; in this example, it's the reporter's name.

This Asset Type references a data catalog where the name is classified as PII.


Define actions to specify whether the final output should be encrypted or masked, as shown below.


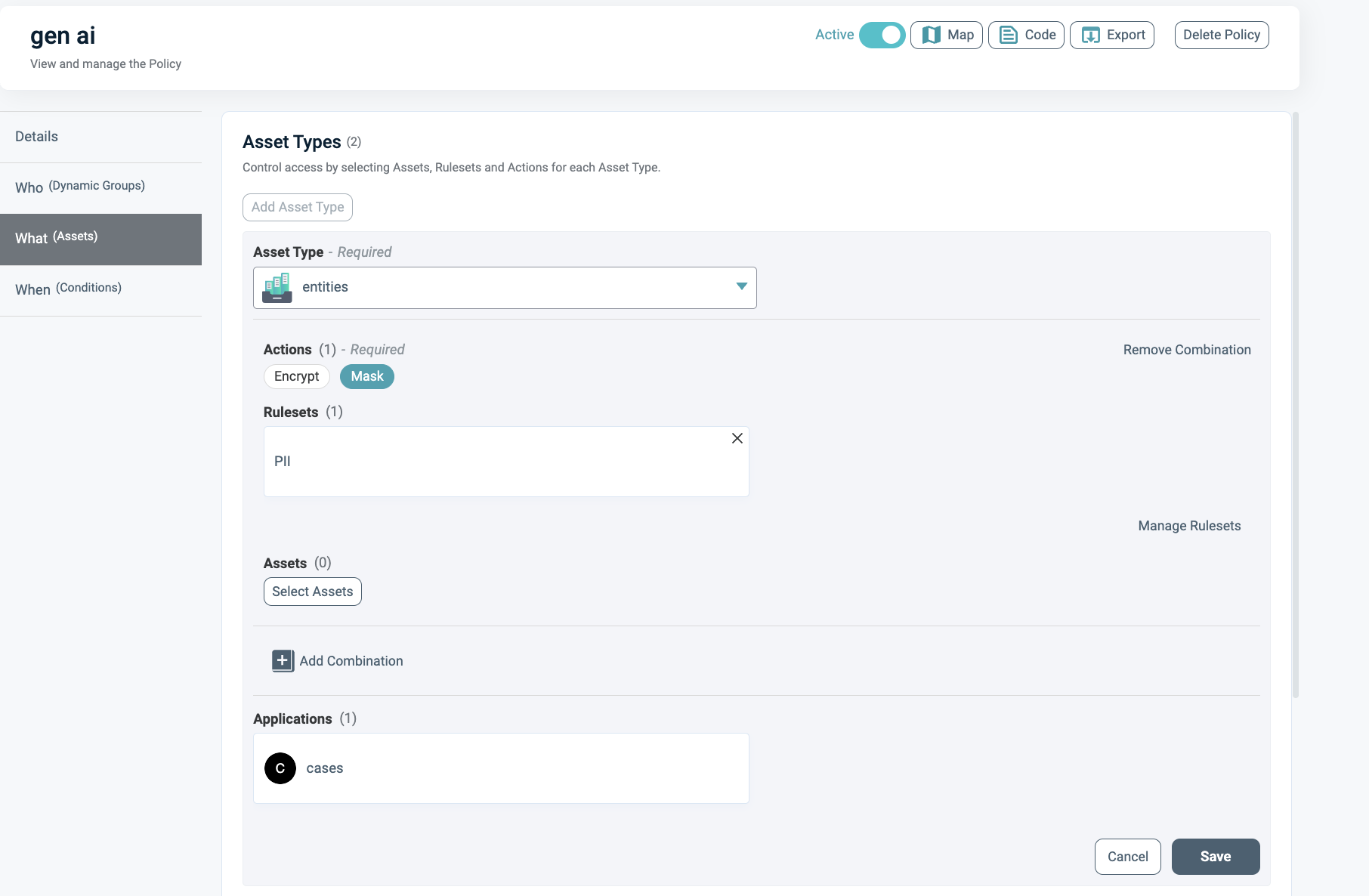

Attach the new Asset Type to a new Policy, including the classification rule set and the desired action.
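The classification rule set and the action can be sketched together in plain Python: a hypothetical data catalog marks `reporter` as PII, and an action of `"mask"` or `"encrypt"` decides how that field is transformed. Python's standard library has no symmetric cipher, so the `"encrypt"` branch here substitutes a keyed HMAC token (pseudonymization); unlike real encryption it cannot be reversed. `DATA_CATALOG`, `pii_fields`, `pseudonymize`, and `apply_pii_action` are hypothetical names, not PlainID's API.

```python
import hashlib
import hmac
from typing import Dict, List

# Hypothetical data catalog: field name -> classification.
DATA_CATALOG: Dict[str, str] = {"case_id": "internal", "country": "internal", "reporter": "PII"}


def pii_fields(record: Dict[str, str]) -> List[str]:
    # Classification rule set: a field is PII if the catalog labels it so.
    return sorted(name for name in record if DATA_CATALOG.get(name) == "PII")


def pseudonymize(value: str, key: bytes) -> str:
    # Stand-in for "encrypt": a keyed HMAC-SHA256 token. Not reversible,
    # but equal inputs under the same key map to equal tokens.
    return "tok_" + hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:12]


def apply_pii_action(record: Dict[str, str], action: str, key: bytes = b"demo-key") -> Dict[str, str]:
    if action not in ("mask", "encrypt"):
        raise ValueError(f"unknown action: {action}")
    protected = dict(record)  # leave the input record untouched
    for name in pii_fields(record):
        protected[name] = "****" if action == "mask" else pseudonymize(record[name], key)
    return protected


case = {"case_id": "C-1042", "country": "Sweden", "reporter": "Mike Smith"}
print(apply_pii_action(case, "mask"))
# {'case_id': 'C-1042', 'country': 'Sweden', 'reporter': '****'}
```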

Anonymize the Response

Anonymize the response using the code block below:

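```python
from langchain_plainid import PlainIDAnonymizer

plainid_anonymizer = PlainIDAnonymizer(
    permissions_provider=permissions_provider,
    encrypt_key="your_encryption_key",  # Optional
)

# Anonymize the generated answer, not the user's query. Here `llm` (as above) drafts
# an answer from the authorized documents, which may include reporter names.
context = "\n".join(f"{d.page_content} (reporter: {d.metadata['reporter']})" for d in filtered_docs)
response_text = llm.invoke(f"Cases:\n{context}\n\nQuestion: {user_query}").content
result = plainid_anonymizer.invoke(response_text)  # Masks PII (e.g., reporter names)
```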

Outcome

PlainID enforces policies at every stage: blocking unauthorized prompts, restricting document access, and redacting sensitive data. This ensures Sara interacts with the AI system securely and within the defined scope of her role.
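The order in which the three guardrails run can be summarized as a small pipeline. Each stage below is a hypothetical stub standing in for the PlainID-backed component (categorizer, retriever, anonymizer); the point is the sequence and the early exit when the first gate denies the query, not the stub logic itself.

```python
from typing import Callable, Dict, List

Record = Dict[str, str]


def run_guarded_query(
    query: str,
    categorize: Callable[[str], str],
    retrieve: Callable[[str], List[Record]],
    generate: Callable[[str, List[Record]], str],
    anonymize: Callable[[str], str],
) -> str:
    try:
        categorize(query)  # Guardrail 1: raises ValueError for a disallowed topic
    except ValueError:
        return "You're not authorized to ask about these topics."
    records = retrieve(query)  # Guardrail 2: only authorized records are returned
    return anonymize(generate(query, records))  # Guardrail 3: mask PII in the answer


# --- Hypothetical stubs standing in for the PlainID-backed components ---
CASES = [
    {"text": "Stockholm login outage", "country": "Sweden", "reporter": "Mike Smith"},
    {"text": "NY login failure", "country": "US", "reporter": "John Miller"},
]


def categorize(query: str) -> str:
    if "internal security" in query.lower():
        raise ValueError("internal_security is not an allowed category")
    return "login_issues"


def retrieve(query: str) -> List[Record]:
    # Stub region filter: drop the US case.
    return [c for c in CASES if c["country"] != "US"]


def generate(query: str, records: List[Record]) -> str:
    return "; ".join(f"{r['text']} (reported by {r['reporter']})" for r in records)


def anonymize(text: str) -> str:
    for name in (c["reporter"] for c in CASES):
        text = text.replace(name, "****")
    return text


print(run_guarded_query("What product support issues are related to internal security?",
                        categorize, retrieve, generate, anonymize))
# You're not authorized to ask about these topics.
print(run_guarded_query("Any recent login outages?", categorize, retrieve, generate, anonymize))
# Stockholm login outage (reported by ****)
```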